🔵 Features:

- S3 bucket setup and IAM credentials

- Django S3 integration using boto3 and django-storages

- File Upload (via web + Postman)

- List Files

- Generate Download URL

- Delete Files

- Postman collection usage

🧱 1. AWS S3 Setup

Step 1: Create an S3 Bucket

- Go to AWS Console > S3 > Create Bucket

- Disable "Block all public access" if files should be publicly accessible.

Step 2: Set CORS Policy (optional)

[
  {
    "AllowedHeaders": ["*"],
    "AllowedMethods": ["GET", "PUT", "POST", "DELETE"],
    "AllowedOrigins": ["*"],
    "ExposeHeaders": ["ETag"]
  }
]
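The same CORS rules can be applied from code instead of the console. The sketch below assumes an already-configured boto3 S3 client is passed in; the helper names `build_cors_configuration` and `apply_cors` are illustrative and not part of the original project. A wildcard origin is broader than most deployments need, so the origins are a parameter.

```python
def build_cors_configuration(allowed_origins):
    """Return the CORS rules from this step in the shape boto3 expects."""
    return {
        "CORSRules": [
            {
                "AllowedHeaders": ["*"],
                "AllowedMethods": ["GET", "PUT", "POST", "DELETE"],
                "AllowedOrigins": list(allowed_origins),
                "ExposeHeaders": ["ETag"],
            }
        ]
    }


def apply_cors(s3_client, bucket_name, allowed_origins=("*",)):
    """Attach the CORS configuration to the bucket via the S3 client."""
    s3_client.put_bucket_cors(
        Bucket=bucket_name,
        CORSConfiguration=build_cors_configuration(allowed_origins),
    )
```

With boto3 installed, `apply_cors(boto3.client('s3'), 'your-bucket', ['https://example.com'])` would replace the bucket's existing CORS configuration; the origin shown is a placeholder.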

Step 3: IAM User with S3 Access

- Create an IAM user with programmatic access

- Attach policy AmazonS3FullAccess (for testing)

Save:

- Access Key ID

- Secret Access Key
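AmazonS3FullAccess covers every bucket in the account, which is convenient for testing but wider than this project needs. A policy scoped to one bucket is a safer fit once testing is done. The following is a sketch of such a policy document built in Python; `build_bucket_scoped_policy` is a hypothetical helper, and the action list is an assumption based on the upload, list, download, and delete views in this guide.

```python
import json


def build_bucket_scoped_policy(bucket_name):
    """Build an IAM policy document limited to a single bucket."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Listing is a bucket-level action, so it targets the bucket ARN.
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": f"arn:aws:s3:::{bucket_name}",
            },
            {
                # Object-level actions target the keys inside the bucket.
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject", "s3:DeleteObject"],
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
            },
        ],
    }


if __name__ == "__main__":
    # Print JSON that can be pasted into the IAM console's policy editor.
    print(json.dumps(build_bucket_scoped_policy("your-bucket"), indent=2))
```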

⚙️ 2. Django Project Setup

Install Required Packages

pip install django boto3 django-storages python-decouple

settings.py

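from decouple import config

INSTALLED_APPS += ['storages']

AWS_ACCESS_KEY_ID = config("AWS_ACCESS_KEY_ID")
AWS_SECRET_ACCESS_KEY = config("AWS_SECRET_ACCESS_KEY")
AWS_STORAGE_BUCKET_NAME = config("AWS_STORAGE_BUCKET_NAME")
AWS_S3_REGION_NAME = 'us-east-1'  # update if needed
AWS_S3_FILE_OVERWRITE = False  # django-storages renames a file instead of overwriting it
AWS_DEFAULT_ACL = None  # send no per-object ACL
AWS_QUERYSTRING_AUTH = False  # django-storages returns plain, unsigned URLs

# Django 4.2+ prefers the STORAGES setting; DEFAULT_FILE_STORAGE was removed in Django 5.1.
DEFAULT_FILE_STORAGE = 'storages.backends.s3boto3.S3Boto3Storage'

.env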

AWS_ACCESS_KEY_ID=your-key

AWS_SECRET_ACCESS_KEY=your-secret

AWS_STORAGE_BUCKET_NAME=your-bucket

settings.py (env support)

from decouple import config

📂 3. File Upload View
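The upload view in this section uses the uploaded file's name as the S3 key, so a later upload with the same name replaces the earlier object. `AWS_S3_FILE_OVERWRITE` only affects files saved through django-storages, not direct boto3 calls. One way to avoid collisions is to prefix each key with a random identifier. The helper below is a sketch; `make_object_key` is an assumed name, not part of the original project. It deliberately adds no `/` to the key, because the `<str:filename>` routes used later in this guide do not match slashes.

```python
import os
import uuid


def make_object_key(original_name):
    """Return a collision-resistant S3 key that keeps the original file name."""
    # Drop any directory part a client may have sent (e.g. "../../x.pdf").
    base_name = os.path.basename(original_name.replace("\\", "/"))
    # A random 32-character hex prefix keeps two uploads of "report.pdf" apart.
    return f"{uuid.uuid4().hex}-{base_name}"
```

In the view, `s3.upload_fileobj(file, settings.AWS_STORAGE_BUCKET_NAME, make_object_key(file.name))` would store the object under the generated key. That key, rather than `file.name`, is what the client would then need for the download and delete endpoints.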

forms.py

from django import forms

class UploadForm(forms.Form):
    file = forms.FileField()

views.py

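import boto3
from django.conf import settings
from django.http import JsonResponse
from django.shortcuts import render
from django.views.decorators.csrf import csrf_exempt

from .forms import UploadForm

@csrf_exempt  # Postman sends no CSRF token; remove this if uploads only come from the HTML form
def upload_file(request):
    if request.method == 'POST':
        form = UploadForm(request.POST, request.FILES)
        if form.is_valid():
            file = request.FILES['file']
            # boto3 does not read Django settings, and python-decouple does not
            # export .env values to the process environment, so pass the keys explicitly.
            s3 = boto3.client(
                's3', region_name=settings.AWS_S3_REGION_NAME,
                aws_access_key_id=settings.AWS_ACCESS_KEY_ID,
                aws_secret_access_key=settings.AWS_SECRET_ACCESS_KEY,
            )
            s3.upload_fileobj(file, settings.AWS_STORAGE_BUCKET_NAME, file.name)
            return JsonResponse({"message": "Upload successful", "filename": file.name})
    return render(request, 'upload.html', {'form': UploadForm()})

📋 4. List Files in S3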
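A single `list_objects_v2` call returns at most 1,000 keys, so the listing view in this section truncates the result for larger buckets. boto3 paginators follow the continuation tokens automatically. The sketch below assumes a boto3 S3 client is passed in; `list_all_keys` is an illustrative name.

```python
def list_all_keys(s3_client, bucket_name, prefix=None):
    """Collect every object key in the bucket, following pagination."""
    paginator = s3_client.get_paginator("list_objects_v2")
    kwargs = {"Bucket": bucket_name}
    if prefix:
        # Only narrow the listing when a prefix was actually requested.
        kwargs["Prefix"] = prefix
    keys = []
    for page in paginator.paginate(**kwargs):
        # 'Contents' is missing from a page that holds no objects.
        keys.extend(obj["Key"] for obj in page.get("Contents", []))
    return keys
```

Inside the view, `JsonResponse({"files": list_all_keys(s3, settings.AWS_STORAGE_BUCKET_NAME)})` would return the full listing. For very large buckets, returning one page at a time to the caller may be the better design.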

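def list_files(request):
    # Credentials come from settings, which loads them from .env.
    s3 = boto3.client(
        's3', region_name=settings.AWS_S3_REGION_NAME,
        aws_access_key_id=settings.AWS_ACCESS_KEY_ID,
        aws_secret_access_key=settings.AWS_SECRET_ACCESS_KEY,
    )
    response = s3.list_objects_v2(Bucket=settings.AWS_STORAGE_BUCKET_NAME)
    file_keys = [obj['Key'] for obj in response.get('Contents', [])]
    return JsonResponse({"files": file_keys})

📥 5. Generate Download URL (Presigned)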
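A browser may display a presigned object inline rather than saving it. Adding `ResponseContentDisposition` to the `get_object` parameters asks S3 to return a download header with the response. This is a sketch that assumes a boto3 S3 client like the one in this section's view; `presign_download` is a hypothetical helper name.

```python
def presign_download(s3_client, bucket_name, key, expires_in=3600):
    """Return a presigned GET URL that prompts the browser to save the file."""
    # Simple quoting only: file names containing quotes or non-ASCII
    # characters would need RFC 6266 encoding, which is not handled here.
    disposition = f'attachment; filename="{key}"'
    return s3_client.generate_presigned_url(
        ClientMethod="get_object",
        Params={
            "Bucket": bucket_name,
            "Key": key,
            "ResponseContentDisposition": disposition,
        },
        ExpiresIn=expires_in,
    )
```

Generating the URL does not check that the object exists; a request for a missing key fails only when the URL is opened.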

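from django.http import JsonResponse

def download_file(request, filename):
    # Credentials come from settings, which loads them from .env.
    s3 = boto3.client(
        's3', region_name=settings.AWS_S3_REGION_NAME,
        aws_access_key_id=settings.AWS_ACCESS_KEY_ID,
        aws_secret_access_key=settings.AWS_SECRET_ACCESS_KEY,
    )
    # The URL grants read access to this one key for ExpiresIn seconds (one hour).
    url = s3.generate_presigned_url(
        ClientMethod='get_object',
        Params={'Bucket': settings.AWS_STORAGE_BUCKET_NAME, 'Key': filename},
        ExpiresIn=3600,
    )
    return JsonResponse({"url": url})

🗑️ 6. Delete File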

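from django.http import JsonResponse

def delete_file(request, filename):
    s3 = boto3.client(
        's3', region_name=settings.AWS_S3_REGION_NAME,
        aws_access_key_id=settings.AWS_ACCESS_KEY_ID,
        aws_secret_access_key=settings.AWS_SECRET_ACCESS_KEY,
    )
    s3.delete_object(Bucket=settings.AWS_STORAGE_BUCKET_NAME, Key=filename)
    return JsonResponse({"message": f"{filename} deleted."})

🌐 7. URLs Setup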

urls.py

from django.urls import path

from . import views

urlpatterns = [
    path('upload/', views.upload_file, name='upload_file'),
    path('files/', views.list_files, name='list_files'),
    path('download/<str:filename>/', views.download_file, name='download_file'),
    path('delete/<str:filename>/', views.delete_file, name='delete_file'),
]

📫 8. Postman Testing

1️⃣ Upload File (POST /upload/)

- Method: POST

- URL: http://localhost:8000/upload/

- Body → form-data:

  - Key: file, Type: File, Choose a file

- Expected Response:

{
  "message": "Upload successful",
  "filename": "example.pdf"
}

2️⃣ List Files (GET /files/)

- Method: GET

- URL: http://localhost:8000/files/

- Expected Response:

{
  "files": ["example.pdf", "test.jpg"]
}

3️⃣ Generate Download URL (GET /download/<filename>/)

- Method: GET

- URL: http://localhost:8000/download/example.pdf/

- Expected Response:

{
  "url": "https://bucket.s3.amazonaws.com/example.pdf?...signed"
}

4️⃣ Delete File (GET /delete/<filename>/)

- Method: GET

- URL: http://localhost:8000/delete/example.pdf/

- Expected Response:

{
  "message": "example.pdf deleted."
}

🛡️ 9. Security Tips

- Use S3 presigned URLs instead of making files public

- Store AWS credentials in .env or use IAM roles in production

- Set S3 bucket policy to restrict access by IP, path, or condition
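To illustrate the last tip, a bucket policy can deny requests that originate outside an allowed IP range. The sketch below builds such a policy document in Python; `build_ip_restricted_policy` is a hypothetical helper, and `203.0.113.0/24` is a documentation-only example range. A Deny on `s3:*` also applies to console users and to presigned URLs opened from outside the range, so it should be tested carefully before being attached.

```python
import json


def build_ip_restricted_policy(bucket_name, allowed_cidr):
    """Build a bucket policy that denies access from outside allowed_cidr."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyOutsideAllowedRange",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                # Cover both the bucket itself and every object in it.
                "Resource": [
                    f"arn:aws:s3:::{bucket_name}",
                    f"arn:aws:s3:::{bucket_name}/*",
                ],
                # The Deny applies whenever the caller's IP is NOT in the range.
                "Condition": {"NotIpAddress": {"aws:SourceIp": allowed_cidr}},
            }
        ],
    }


if __name__ == "__main__":
    print(json.dumps(build_ip_restricted_policy("your-bucket", "203.0.113.0/24"), indent=2))
```

The resulting document could be attached with `s3_client.put_bucket_policy(Bucket='your-bucket', Policy=json.dumps(policy))`, given a client whose credentials are allowed to change bucket policies.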

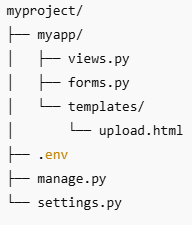

📁 Folder Structure (Recommended)